INTRODUCTION

This monograph is now entitled The Shape of Tomorrow. Its first part is devoted to the profound transformation that I discern in the very structure of knowledge and in how humans relate to it. I have identified seven major transformations, which I have taken to calling the “Seven Pillars of the Knowledge Revolution”. The second part is devoted to the implications of the transformations mapped in the first. I am convinced that the cumulative effect of these transformations is the most profound revolution since the invention of writing, which enabled humanity to preserve acquired knowledge and pass it on through time and space. This is not hyperbole. I think the evidence that I will marshal will support that dramatic view.

Given that there were seven pillars, I was tempted to call the monograph “The Seven Pillars of Wisdom”. For those with a literary or historical inclination, that title would have recalled the memoirs of T. E. Lawrence [1]. Better known to the wider public as “Lawrence of Arabia”, Lawrence was a British officer who helped foment the Arab revolt against the Turks during the First World War.

But I chose not to invoke that title, not because there is anything wrong with another scholar using the same title for another work almost a century later, but because upon reflection I felt that what I was writing about was knowledge and not wisdom. Data when organized becomes information, and information when explained becomes knowledge, but wisdom is something else. It requires combining knowledge with prescience, judgment and the patina of experience.

And in reflecting on the subject at hand, I was confronted with the very real difficulty that the new Knowledge Revolution we are witnessing is raising many questions and opening up many possibilities that I can only hope humanity will have the wisdom to address well. Hence my sweeping title, and the hope built into tackling that subject: that these seven pillars and their implications will turn out to be not just a knowledge revolution, but the start of wisdom… But humility would have us ask, as T.S. Eliot did a century ago:

Where is the Life we have lost in living?

Where is the wisdom we have lost in knowledge?

Where is the knowledge we have lost in information?

-- T.S. Eliot

So, having chosen to speak of The Shape of Tomorrow, I invite you to read on, and to share my wonder and admiration, my concerns and my misgivings, and above all to be infected by the excitement of the times, and the fantastic explorations that lie ahead, that will transform forever our views of ourselves and the universe that surrounds us, that will change the very structure of knowledge that we delve into, manipulate, and add to in the hope of creating better tomorrows.

THE SHAPE OF TOMORROW – PART ONE

THE SEVEN PILLARS OF THE NEW KNOWLEDGE REVOLUTION

PROLOGUE:

We are on the cusp of a profound transformation in how knowledge is structured, accessed, manipulated and understood, in how it is added to, and in how it is displayed and communicated. It is the most profound transformation in the history of humanity since the invention of writing.

Is this an overstatement? I think not. I believe the observations that I shall outline hereunder justify that sweeping characterization.

The seven main features of the knowledge revolution that I speak of can be characterized as the seven pillars of the new knowledge revolution. Many people have spoken of the ICT revolution as the “Knowledge Revolution”, with a focus on the enormous increase in knowledge available to all people, the fantastic increase in communications between people and businesses, and the resulting emergence of the knowledge-based society and the technologically based economy, with the well-known and well-documented aspects of globalization overlaid on this transformation. Here I am speaking instead of the structure and presentation of knowledge and of how we humans will most likely interact with it, whether we are academics or researchers or simply the descendants of those who used to go to public libraries and ask the librarian for help finding a good book to read or a reference source for a college paper. It is this that I refer to as the “New Knowledge Revolution”, and it is its seven key characteristics, which I like to call “pillars”, that I describe here. These are:

· Parsing, Life & Organization

· Image & Text

· Humans & Machines

· Complexity & Chaos

· Computation & Research

· Convergence & Transformation

· Pluri-Disciplinarity & Policy

A word about each of these seven pillars is pertinent here:

FIRST: PARSING, LIFE & ORGANIZATION

Since the beginning of time, whether we were writing on scrolls or in codices, in manuscript or in print, the accumulation of knowledge was based on parsed structures, with units put next to each other like bricks in a wall or an emerging structure.

By the 17th century we had defined a convention on how to organize that knowledge in the parsed unit, namely:

· An introduction and problem statement

· An identification of the sources

· An identification of the methodology to be used

· Marshaling the evidence

· Analyzing the evidence

· Interpreting the findings

· Conclusions

To that list we sometimes also added a survey of the literature. Classical scholarship developed a formidable array of tools: bibliographies, lists of references, footnotes and endnotes to give credit and standardize presentations and citations. Style sheets and style manuals were developed and adopted.

Whether the piece was to be published in a journal or in a book, or as a monograph or a standalone book, the general structure was approximately the same even if the length varied.

It was the juxtaposition of these individual parsed works that created the accumulation of knowledge… the rising edifice built piece by piece, brick by brick or stone by stone…

In addition each piece was “dead”. By that I mean that once published it stayed as it was until a second edition would appear. If we both had copies of the same book, we could both open to, say, page 157 and find exactly the same thing in our respective copies. It did not change whether we did it immediately after the book appeared or decades later.

The Internet changed all that…

The web page became the unit of parsing. Instead of the classical sequence of presentation, we now think in terms of a home page and then hypertext links into other related documents.

Hypertext on the web is by now an old technology, dating from the early 1990s. We can expect more fluidity in the merging of images, both still and video, and in the transitions from one reference link to another.

The material being posted on the web today is different. It is “alive”! By that I mean it is being changed all the time. Updates, amendments and linked postings make this material, unlike traditionally published material, come alive. Today if I look up a web page, and you look it up at the same location a few hours later, it will probably have changed, since the material is constantly being updated.

Search engines complement the World Wide Web. Their lightning-quick ability to find thousands of relevant objects matching the words being searched for has made on-line material accessible and retrievable. Furthermore, as we move beyond the current structures of the web towards the semantic web, where we can search for relationships and concepts and not just objects, the organization and presentation of knowledge will become one large, interconnected, vibrant living tissue of concepts, ideas and facts that is growing exponentially and that will require new modes of thinking to interact with it. It will automatically spawn these new modes of thinking, and scholarship will no longer be parsed like bricks in a wall; it will be more like a smoothly flowing river.

In fact, today, “Web Science” is becoming a domain in its own right. It is a new theme emerging to address the issues relating to multidisciplinarity, the semantic web, linked data, etc. There is now a trust for that (http://webscience.org/home.html) and a series of conferences has been started (http://www.websci10.org/home.html). Actually, it is one of the exciting areas of contemporary computer science.

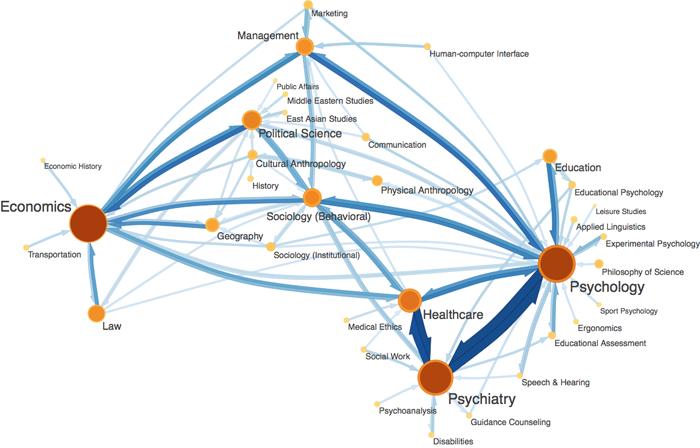

But harking back to the conventional definitions of the humanities and the sciences, we can now envisage a new way of organizing knowledge based on the intensity of the links between the subjects. Here are two excellent diagrams, posted at http://www.eigenfactor.org/map/maps.htm, covering both the social and the natural sciences, presented as diagrams of interactivity, not as taxonomies of subjects, headings and sub-headings. They also have the virtue of representing the intensity of the links visually, as thicker connections in the diagrams, and they also show the size of the overall links and their directionality.

In both maps, orange circles represent fields, with larger, darker circles indicating larger field size as measured by Eigenfactor score™. Blue arrows represent citation flow between fields. An arrow from field A to field B indicates citation traffic from A to B, with larger, darker arrows indicating higher citation volume. To study these maps, please refer to the details at http://www.eigenfactor.org/map/maps.htm [2]

[1] It is true that my title is evocative of that work, but then Lawrence himself had really taken it from the Book of Proverbs, 9:1: "Wisdom hath builded her house, she hath hewn out her seven pillars" (KJV). It is not very clear why he used that title for his memoirs, since he had started before the War to write a book by that title, which was about seven cities of the Middle East. It was never completed and the manuscript of that work did not survive.

During the war, while Lawrence was active with the Arab Revolt of 1917–18, he had based his operations in Wadi Rum (now a part of Jordan), with spectacular rock formations all around. Apparently, one particularly impressive rock formation in the area was named by Lawrence "The Seven Pillars of Wisdom". Whatever the reason, Lawrence decided to use this evocative title for his memoirs, written after the war. So the title “The Seven Pillars of Wisdom” would forever be associated with the memoirs of T. E. Lawrence.

[2] The maps were created using the Eigenfactor information flow method for mapping large networks: For the natural sciences map, the authors state: “Using data from Thomson Scientific's 2004 Journal Citation Reports (JCR), we partitioned 6,128 journals connected by 6,434,916 citations into 88 modules. For visual simplicity, we show only the most important links, namely those that a random surfer traverses at least once in 5000 steps, and the modules that are connected by these links”.

For the social sciences map, they state: “Using data from Thomson Scientific's 2004 Journal Citation Reports (JCR), we partitioned 1431 journals connected by 217,287 citations into 54 modules. For visual simplicity, we show only the most important links, namely those that a random surfer traverses at least once in 2000 steps, and the modules that are connected by these links”.

See http://www.eigenfactor.org/map/maps.htm. Thomson Reuters (Scientific) Inc. provided the data used in calculating the Eigenfactor™ Score, the Article Influence™ Score, and other analyses. (©2009 Carl Bergstrom | site design by: ben althouse).

Diagram 1: The Social Sciences:

Diagram 2: The Natural Sciences:

If one were to try to take into account as well the social linkage phenomena that the internet and the web have now made possible, it is interesting to map out where all the different applications may lie in a four-cell space that plots degree of social connectivity against degree of knowledge connectivity. Such a diagram has been drawn by Nova Spivack at www.mindingtheplanet.net and is reproduced here. He also interprets the impacts of these applications, and defines the space that he names the Meta-Web, with high knowledge connectivity and high social connectivity, and sees in it the connectivity of intelligence.

Diagram 3: The Meta Web

SECOND: IMAGE & TEXT

Throughout history, the primary means for the transmission of information has been text. Images were difficult to produce and to reproduce. This has changed. With the digital revolution, everybody can record images, both still and video, and computer-generated graphics are becoming affordable for everybody. Billions of images are being posted on the web, and such sites as Flickr and YouTube showed how the public at large could contribute to this enormous stock of visual materials.

The human brain can process visual information with enormous rapidity. Thus one can open a door and look into a room for a second and then be able to report, by and large, on the size of the room, its furniture, the color of the walls, the presence of windows, whether the table at which six people, two men and four women, were sitting was metal or wooden, whether the chairs were leather or plastic, and so on… enormous detail captured and processed in a fraction of a second. If one were to convey that with text, it would take several pages to give the same accuracy of rendering that a passing glimpse can achieve.

See this lament from d’Alembert in preparing the famous Encyclopédie in the 1750s…

But the general lack of experience, both in writing about the arts and in reading things written about them, makes it difficult to explain these things in an intelligible manner. From that problem is born the need for figures. One could demonstrate by a thousand examples that a simple dictionary of definitions, however well it is done, cannot omit illustrations without falling into obscure or vague descriptions; how much more compelling for us, then, was the need for this aid! A glance at the object or at its picture tells more about it than a page of text.

d’Alembert, Jean Le Rond. Preliminary Discourse to the Encyclopedia of Diderot. Translated by Richard N. Schwab. Indianapolis, IN: Bobbs-Merrill, 1963, p.124. Cited in The Culture of Diagram, John Bender and Michael Marrinan, Stanford University Press, Stanford, CA, 2010, p.11.

True, image is more efficient. But text is different. It activates interaction between the reader and the writer. The result is a joint construction. Text works on the basis of three interlocking abstractions. A letter is an abstraction, which we recognize visually and identify, then combinations of letters form words, and combinations of words form sentences. We ascribe meaning to the words and sentences. Out of that we interpret the text description of the room in question into a mental image. In a sense, that is the difference between reading a novel and seeing the film made out of the novel.

So some new features of the current knowledge revolution appear imminent. One is the far larger reliance on image, in addition to text, in the communication of information and knowledge, and the changing forms of the storage and retrieval devices that this will require as we move from the text-dependent book and journal to digital still and video image presentations as well as three-dimensional virtual reality and holographic presentations. Interactivity will also become a feature of this new image-based virtual-reality world. Again, what does that mean in terms of the presentation, the search and retrieval functions and the interaction between the researcher and the material in the future?

Second: There was an observation that among the population at large, text in print acquired a greater credibility than oral communication. Experience and a rising cynicism allowed us to start doubting the material that was printed in newspapers for example. In other words, just because it is printed does not mean that it is true. But we tended to accept the authority of pictures. If someone said that x had met y in a certain location, and showed you a picture of x with y at that location, that tended to be the ultimate proof. Nowadays, with Photoshop and much more sophisticated software programs and techniques to manipulate images, one is no longer sure as to how credible these images are.

Third: it is not clear how the image and text aspects of knowledge formulation and transmission will play out. The future is bound to be interactive (much as the “dead” text will become “live” text as discussed in the first of the seven pillars). Interactivity will create a whole new experience in the treatment of knowledge and how it is experienced by the reader/user.

Whatever we think it will do in terms of dealing with abstract thinking and the ability to develop the interpretative capacity of the reader/user, image and text will intermingle as never before, with image taking on a more and more preponderant role over time.

But in support of image, it is clear that it can do many things that truly help humans acquire and interpret knowledge. For example, image representations of large data sets help us determine patterns much more easily and compare data sets through the patterns they generate. Graphs of socio-economic data are among the simplest forms of such images, while multidimensional representations using virtual reality animation to add changes over time can be extremely enlightening.

Maps and diagrams enable us to make sense out of material that would be impossible to describe effectively. In the preceding discussion of the first pillar, I have used maps/diagrams of the web and connectivity, as well as the linkages between the social and the natural sciences. These diagrams summarized efficiently enormous amounts of information that would have been difficult to convey in some other way. Or consider a map/diagram of the subway system of a large city such as Tokyo or Paris. It is impossible to rely on text to convey this amount of information, while a small single sheet of paper with a simple diagram does the job exquisitely.

Images, both still and video, allow us to experience and see things from angles that we are never likely to be able to see for ourselves. Images of astronauts on the moon, or the image of the earth from space, have enormously enriched humanity as a whole, by allowing not only a vicarious pleasure in joining the astronauts on their quest, but also by truly educating us about the marvel that is our beautiful blue planet. That picture of the earth from space remains the most downloaded image from the internet as of the writing of this essay.

Images also assist us to “see” the world in different ways. With x-rays, scans and similar imagery we see beyond the visual range of humans on the spectrum. Heat sensitive photos show us everything from tomography for humans to battlefield conditions that show heat signatures of living creatures in the dark. We can map through such imagery the firing of neurons in the brain and see which parts of the brain we use to listen to music and which parts we use to solve math problems or to enjoy a conversation with another human. This extension of the range of human sight, through the development of image technology into the UV and IR ranges of the spectrum, can have profound implications for how we understand the world around us.

Our ability to interpret data is expanded by many orders of magnitude through pattern recognition and simulation in 3-D or virtual reality. Not only can we handle enormous amounts of data, but the models can enhance certain features to enable us to study them better. For example, by simulating the flows of wind and sand over a monument like the Great Sphinx of Giza, from the different orientations and for the durations that they are likely to occur in a typical year, we can study the effects of erosion on the hundreds of thousands of data points that make up the body of the Sphinx, and generate maps of the areas of the statue’s enormous body most at risk of erosion, giving us leads as to the parts that require the most frequent monitoring. In addition, computers can modify colors to give us a more sensitive ability to differentiate between values, and we can interact with the data much more profoundly in virtual reality.

So what does all this mean for the effective description in meta-data, the storage, searchability and retrievability of this enormous and growing world of still and moving images, both fixed and interactive?

Systems based on pattern recognition appear imminent and promising. Already, Google offers services where you can show a picture of a location and get details about where you are and information about the location or the building or landmark in the picture. Much more of this is in our future. We will no longer be looking up images through key words entered into text data bases such as meta-data catalogues. Computers will do this for us.

THIRD: HUMANS & MACHINES

With the exception of pure mathematics and some aspects of philosophy, it will no longer be possible for any human to search for, find and retrieve, and then manipulate knowledge in any field, much less add to it and communicate their own contribution, without the intermediation of machines. Even in literary criticism and the social sciences, the stock of material can no longer be searched through manually.

Pure mathematics can still be done with a pencil and paper or a chalk board writing and thinking about equations… Philosophical reflections on the meaning of life and the purpose of the universe will always be possible with a certain detachment from the body of knowledge already available. But for anything else, it will not be possible.

This is not good or bad. It just is.

On one level, the machines will vastly expand the scope of our ability to grasp and manipulate knowledge; on another, the technology they represent will increasingly interact with the formulation of new scientific concepts. In other words, technology will spawn new ways of understanding and will generate new scientific insights. These in turn will help spark new technologies, which will again contribute to the enhancement and acceleration of the process of scientific discovery. This spiral has been accelerating and will continue to accelerate.

In fact, new technologies in one field may lead to entirely new discoveries in other fields. Consider the new biology. It would have been impossible to imagine a few decades ago. Without access to high-speed computers, it would have been impossible for a human being to list the three billion letters of the human genome and then put another such list next to it and identify a single nucleotide polymorphism (SNP).
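To give a sense of what that comparison involves, here is a minimal sketch, assuming two sequences that are already aligned, of equal length, and held as plain strings. The function name `find_snps` and the toy sequences are illustrative assumptions only; comparing real genomes requires alignment and far more machinery than this.

```python
def find_snps(reference: str, sample: str) -> list[tuple[int, str, str]]:
    """Return (position, reference_letter, sample_letter) for every
    position at which the two sequences differ by a single letter."""
    if len(reference) != len(sample):
        # Simplifying assumption: the sequences are pre-aligned.
        raise ValueError("this sketch assumes aligned sequences of equal length")
    return [
        (position, ref_letter, sample_letter)
        for position, (ref_letter, sample_letter) in enumerate(zip(reference, sample))
        if ref_letter != sample_letter
    ]


# Toy example: two short made-up sequences differing in one letter.
reference = "GATTACAGATTACA"
sample = "GATTACAGCTTACA"
print(find_snps(reference, sample))
```

The loop touches each position exactly once, which is trivial for fourteen letters and only feasible by machine for three billion.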

What about Artificial Intelligence (AI)? The holy grail of many computer and robotics designers, AI has been an elusive goal ever since Alan Turing designed the famous “Turing test” to determine the intelligence of machines, and wrote his landmark paper on intelligent machines [1].

Systematic efforts at seeking AI probably started with John McCarthy, Marvin Minsky and colleagues, who launched the field under that name at a famous Dartmouth conference in 1956. Debates raged, with some arguing that it was impossible; a famous thought experiment by John Searle, the so-called “Chinese Room” analogy, tried to show that computers would forever remain captive to the programs written for them. Dramatic improvements in hardware and gradual improvements in software led to demonstrable successes in complex tasks, such as when IBM’s special chess-playing computer Deep Blue defeated world champion Garry Kasparov in 1997.

Ray Kurzweil and other visionaries have been arguing that “consciousness” and “intelligence” are emergent qualities of very complex systems, and that we are going to witness them emerging in machines once they pass certain thresholds of complexity and power: when processing power reaches a certain level, and software advances to a corresponding level within a decade or so after that, all of which is likely to happen within the first half of the 21st century.

But whatever the merits of that particular debate and its ramifications, it is clear that changes are already noticeable in the domain of libraries and the internet. There are a number of new initiatives, all of which the Bibliotheca Alexandrina (BA) is actively involved in, which demonstrate the contours of things to come. They are a “preview of coming attractions” in the movie parlance of yesteryear!

Wikipedia, the brainchild of Jimmy Wales, demonstrated that you could bring together some 100,000 people who do not know each other from all over the world to collectively construct the world’s largest encyclopedia within five years! An impossible feat to imagine a few years ago. Furthermore, Wikipedia shows all the features of a living text, constantly being added to and amended and expanded, as discussed under the heading of the first pillar, even though it still retains the classical presentation of the arguments in its articles. The BA is encouraging the Arabic language contributors to the Wikipedia, and hosts their events whenever they request it.

New frontiers are being broached by the Encyclopedia of Life (EOL), a consortium of natural history museums working to document all of biodiversity. Here every one of the 1.9 million known and scientifically identified species would be given a web page, within an infinitely expandable website, that follows a common layout and presentation system. Behind that is the Biodiversity Heritage Library, set up by a consortium of 18 great university and research libraries that will pool some 300-600 million pages of biodiversity literature. The enterprise here is truly enormous, and could not be conceived without the modern flexible and infinitely adaptable technologies of the web. Here the beginning of parsing by website and related material has become apparent. The BA is setting up a mirror site for the EOL in order to serve users in the region. It is also working on the internationalization of the EOL and adding an Arabic component to the system.

Emerging from the need to make sources of knowledge accessible, and aiming to address the scarcity of scientific material in various parts of the world, the BA Science SuperCourse project started in 2006. Activated by a “Community of Practice” whose members use the materials and evaluate them, the SuperCourse offers Internet users more than 3,600 PowerPoint lectures in epidemiology and preventive health for free. During the past year, the SuperCourse materials were used by 60,000 teachers in 175 countries to reach about one million students. Today, the BA with its partners from the University of Pittsburgh focuses on extending the scope of the SuperCourse to cover four major fields of science: Environment, Agriculture and Computer Science, in addition to Health.

The new Science SuperCourse offers users a variety of functionalities reinforced by effective dissemination tools, convenient user access and an efficient retrieval system. The system has been built on Web 2.0 technologies, aiming to further the engagement of the community allowing individuals to rate lectures, submit comments, negative reports, or even participate in filtering the content of the repository.

On another level, the Science SuperCourse is a meeting point where experts share their knowledge and their best lectures by uploading them onto the system to be shared with others worldwide. As such, networking is the main factor in the success of the Science SuperCourse. Here we can see that the combination discussed under the first of the seven pillars, of expanding knowledge connectivity with the social connectivity and networking made possible by the web, is already reflected in the past experience and future prospects of the BA Science SuperCourse.

Universal Networking Language: The Bibliotheca Alexandrina (BA) is taking part as one of the vital partners in the Universal Networking Language (UNL) program, initiated within the United Nations. The mission of the program is to break language barriers between cultures in the digital age and to enable all people to generate information and have access to cultural knowledge in their native languages.

UNL is an artificial language attempting to replicate the functions of natural language in human communication. It is based on a certain workflow, where a natural language is “enconverted” into the UNL and then it is “deconverted” back into the target natural language using the UNL system tools. Currently, 15 languages have been incorporated and a number of institutions have started to work on their respective native languages. The UNL project is one of the main areas of research at the BA, where we are building and designing the Arabic Dictionary in the UNL system which contains to date more than 140,000 entries and more than 80,000 concepts, and the International Corpus of Arabic (ICA) which has some sixty million words, each of which has some 16 linguistic parameters attached to it.

If successful, this program incorporating both the hub (UNL) and the spokes (natural languages) will allow even small languages to be incorporated into a system where native speakers can access the growing stock of material in other languages, especially scientific material, which is less likely to use simile, metaphor, sarcasm and ambiguity that one finds in literature and poetry for example.
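The advantage of the hub-and-spokes arrangement can be shown with simple arithmetic. The following is a minimal sketch, assuming one converter per direction of translation; the function names are illustrative and are not part of the UNL tools.

```python
def direct_converters(n_languages: int) -> int:
    """Converters needed if every language is translated directly
    into every other language, one converter per direction."""
    return n_languages * (n_languages - 1)


def hub_converters(n_languages: int) -> int:
    """Converters needed with a hub: each language needs only an
    enconverter into the hub and a deconverter out of it."""
    return 2 * n_languages


# The 15 languages mentioned above, under these assumptions.
print(direct_converters(15), hub_converters(15))
```

Under these assumptions, adding one more language to the hub costs two new converters, whereas the direct approach would require a new pair for every language already in the system. This is why even small languages can be brought in.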

World Digital Library: The idea of creating the World Digital Library was initiated by the Library of Congress, with the aim of bridging the world’s cultures. It comprises a variety of digitized material (manuscripts, maps, rare books, films, sound recordings, prints and photographs) provided to Internet users with unrestricted access, free of charge, in 7 languages. In April 2009, the World Digital Library was launched at the UNESCO Headquarters in Paris.

The system allows one to link video, image, text, commentary and maps into one seamless whole, to search by many different approaches (time, geography, theme, cluster, or even a single word), and to browse the material as well as find what one wants from the digitized material on offer from all the countries of the world.

Having done a similar program for the Memory of Modern Egypt, the BA has become one of the five institutional founders, and it is assisting in designing and implementing the architecture of the WDL and contributing its particular expertise in the search and display of Arabic texts. Currently, the BA is co-chairing the Technical Architecture Working Group, which covers architecture, workflow, standards, site functionality and technology transfer.

The WDL started with 26 partner institutions from 19 countries at the time of launch, while currently there are 69 partner institutions from 49 countries, and growing. The increasing quantity and diversity of content on the WDL will make it a true World Digital Library that emphasizes quality of content (e.g. a focus on making available digital versions of collections on the UNESCO Memory of the World register), quality of connections, curating and interactivity, and promotes cooperation among the world’s digital library initiatives.

Other considerations: Gaming comes of age:

Another area that is transforming the interaction of humans with machines is the emergence of a whole generation brought up on computer games, or video games as they are sometimes called.

Originally invented by a serious scientist, Higinbotham at Brookhaven National Laboratory, and truly expanded by Baer and Bushnell in the 1970s, video games spawned an entire industry. But with the advent of the Internet, the game based on one person alone interacting with the machine, or two players competing with the machine providing the platform, was to change. Henceforth gamers could play with many other players on line, and pretty soon veritable communities developed in cyberspace. New advances kept pace, making the experience more real, more participatory. Wii controls brought a new and different level of involvement. But the interesting aspect for our discussion here was the formation of communities of gamers.

These communities, formed to play, created complete and convoluted games, some of which were very bizarre by conventional mainstream thinking. By alternating fantasy, violence and eroticism, the games opened avenues for role playing as much as game playing.

Creating avatars in entire parallel worlds became possible, and phenomena such as Second Life and other cyberworlds were created. They even developed their own currencies of exchange, and real corporations began to gain a footing in this make-believe world, doubtless hoping that there would be a carry-over to the real world.

But gamers were not only developing good eye-hand coordination; they were also developing a great deal of familiarity with the complex programming of video games. A number of games started inviting their players to discover the many “embedded” surprise gifts that programmers would leave in the original games. This soon developed into a new kind of interactivity between the individual and the game. The gamers developed their own customized versions of the game, and the companies gave them tools to help them do so.

This created a new generation of users/producers who are comfortable with the machines they use and with the idea of programming them to do their bidding. This is best visualized by seeing how grandchildren help their grandparents to program their cell phones. The dexterity of the young and the awkwardness of the old speak volumes about how this technological transformation is taking root. We can only wonder at how this rapid change will morph the world we have known some thirty years from now.

FOURTH: COMPLEXITY AND CHAOS:

The world we live in is remarkably complex. The socio-economic transactions of a globalizing world are exceedingly complex as, with the click of a mouse and the flight of an electron, billions of dollars move around the planet at the speed of light. The web of interconnected transactions is enormous, and the ripple effects of any single set of actions and its interaction with other effects are difficult to predict.

Our cities have become not only much larger but also much more complex, as the economic base moves inexorably towards a much larger share of our national income being generated from services rather than manufacturing.

Ecosystems are not only delicate, they are intrinsically very intricate. Slight disruptions somewhere can have catastrophic effects elsewhere in this interconnected web of life. Our climate system is proving extremely difficult to measure and model, given the enormous number of variables and data points that must be taken into account.

Biological systems, even at the level of the single cell, are incredibly complicated, and we keep discovering the full measure of our ignorance with every new discovery we make. Life is more complex than we thought, and time after time, our explanatory biological models prove to be too simplistic compared to the complicated reality of nature.

The reality is complex and chaotic, meaning that complex systems have non-linear feedback loops that result in systems and subsystems that are extremely difficult to predict. Many of our models, based on the simple mathematics and analogies drawn from physics, are proving inadequate.

Some, such as Benoit Mandelbrot, have created fractals, and others the logistic map, to model these realities. Others speak of agent-based modeling. Still others, such as Stephen Wolfram, argue for cellular automata as the solution to our problems. Whatever the ultimate reality, it is clear that we will need new math and novel statistical concepts to deal with these kinds of problems. That should not be surprising. In the past, Newton invented calculus to present his celestial mechanics, and Einstein used non-Euclidean geometry and the method of tensors to develop relativity.
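To make the idea of a simple non-linear feedback loop that defeats prediction more concrete, here is a minimal sketch of the logistic map, x -> r * x * (1 - x). It is an illustration only: the starting value 0.2, the tiny nudge of one part in a billion, and the two values of r are assumptions chosen for the demonstration, and the helper names are hypothetical.

```python
def logistic_orbit(x0, r, steps):
    """Iterate the logistic map x -> r * x * (1 - x), returning every value visited."""
    orbit = [x0]
    for _ in range(steps):
        x = orbit[-1]
        orbit.append(r * x * (1.0 - x))
    return orbit


def largest_gap(r, x0=0.2, nudge=1e-9, steps=100):
    """Largest distance reached between two orbits whose starting points differ by `nudge`."""
    a = logistic_orbit(x0, r, steps)
    b = logistic_orbit(x0 + nudge, r, steps)
    return max(abs(p - q) for p, q in zip(a, b))


# r = 2.5 is a regular regime: both orbits settle on the same value.
# r = 4.0 is a chaotic regime: the same tiny nudge grows until the orbits are unrelated.
print("regular regime, largest gap:", largest_gap(2.5))
print("chaotic regime, largest gap:", largest_gap(4.0))
```

The rule is a single line of arithmetic in both cases. Only the strength of the feedback changes, yet in the chaotic regime a measurement error far too small to detect is enough to make long-range prediction meaningless.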

FIFTH: COMPUTATION & RESEARCH

The success of modern numerical mathematical methods and software has led to the emergence of computational mathematics, computational science, and computational engineering, which use high performance computing for the simulation of phenomena and solution of problems in the sciences and engineering. These are considered inter-disciplinary programs.

But a profound shift is underway. Until now, computing has largely been seen as the extension of a large calculating machine that can do dumb calculations at incredible speeds. Computer scientists and engineers were implementers who made the life of the creative people and the researchers less tedious. Wonderful tools, no doubt, but just tools all the same. Today, the concepts and techniques of computing are becoming a central part of the new research paradigm. Computational science concepts, tools and theorems will weave into the very fabric of science and scientific practice.

Consider data management. Data when organized becomes information. Information when explained becomes knowledge. That in turn, when coupled with reflection, insight and experience, may lead to wisdom, but that is another story.

We have been collecting enormous amounts of data. In the past, a large part of many sciences depended on building up organized collections of data and then generating knowledge from their analysis and interpretation. But in our increasingly complex world today, the collections have become huge, and they require particular skills not only in management and organization but also in making them searchable and interpretable. It is the computer scientists who have been developing the skills to handle such matters. They are the ones who can address the end-to-end data management problems, so that we can imagine “End-to-end science data management” covering:

· Acquisition

· Integration

· Treatment

· Provenance and

· Persistence

· And much more…

But beyond the scale and magnitude of the collections of data, we are looking for connections between collections of data. These pose particular problems that involve qualitatively different issues. Computer science is where the most work on such classes of problems has been done.

Because of all this, it is urgent to reconsider the role of computer science, both its computational and information science parts, and recognize that just as the research paradigm will require new kinds of math and novel statistics, computer scientists will weave into the very fabric of science and scientific practice their insights begotten from information theory and from computing mathematics and techniques.

Computational and Information Sciences, based on the work of Shannon and others [2], will provide powerful conceptual and technical tools to be used in biology, for example, if we try to model the functions of a living cell as an information flow system. The study of information science is central to that view. In cosmology and physics, the rhythms of the universe are seen by some to be decodable by the insights of information science.

Computational concepts will also be seen as an integrative force for the new knowledge revolution: for they provide levels of abstraction that will allow scientists from different disciplines to more easily work together, and they will help develop the science computing platforms and applications that will be increasingly integrated in both experimental and theoretical science.

[1] Cf. Turing, A.M. (1950). “Computing machinery and intelligence”. Mind, 59, 433-460. It should be noted that Turing as a young man is credited with having invented the field of computing and formulated the idea of computing machines (Turing machines); see his classic Turing, A.M. (1936). "On Computable Numbers, with an Application to the Entscheidungsproblem". Proceedings of the London Mathematical Society 42: pp. 230–65. 1937. Working to decipher German codes during WW2, he is also credited with having participated in building the first modern computer.

[2] Cf. inter alia, Claude Shannon, "A Symbolic Analysis of Relay and Switching Circuits", MS Thesis, Massachusetts Institute of Technology, Aug. 10, 1937; and Claude Shannon, "A Mathematical Theory of Communication", The Bell System Technical Journal, Vol. 27, pp. 379–423, 623–656, July, October, 1948.

Diagram 4: The New Research Paradigm:

Computation is also learning from biology and physics. Indeed, two totally different and totally new fields are now developing, Quantum Computing and DNA Computing, which explore how quantum computers and DNA computers could be built. Both intend to address the limits of silicon-based conventional computing as we reduce the scale to the atomic level and increase the speed of the arrays.

Among the topics also being explored in biology that may well have significance for computer design in the future are the development and evolution of complex systems, resilience and fault tolerance, and expanded adaptation and learning systems.

Meanwhile, John Koza, a rich entrepreneur who teaches at Stanford, pioneered “genetic programming” for the optimization of complex problems. Genetic Programming is a form of evolutionary computation, and is sometimes loosely referred to as Evolutionary Programming. In biology, random mutations in the many lines of code that constitute the genome can have effects on the organism, and the environment filters the effective ones from those with negative impacts. In the same way, a program can be subjected to many random alterations, each of which is then tested for effectiveness against the stated problem objectives. If an altered version is more effective, it becomes the new standard and is subjected again and again to the cycle of random mutation and filtering, until its performance is optimized way beyond what had been originally conceived by the programmers.

So, Genetic (Evolutionary) Programming alters its own code to find far more complex solutions that are more efficient than the original one devised by humans. While this approach does not lend itself to many classes of problems, it has already shown itself to be remarkably effective in dealing with some, and has been used successfully by Koza and his team to create antennae, circuits, and lenses. Koza calls it the "invention machine", and it has received a patent from the US Patent Office.
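The cycle of random mutation and filtering described above can be sketched in a few lines. This is a deliberately simplified illustration and not Koza's method: genetic programming evolves whole program structures against a problem-specific measure of fitness, whereas the toy below only evolves a fixed-length string of letters towards a known target. The target phrase, the alphabet, the number of generations and all function names are assumptions made for the example.

```python
import random

TARGET = "invention machine"          # illustrative goal, chosen only for this example
ALPHABET = "abcdefghijklmnopqrstuvwxyz "


def fitness(text):
    """Score a candidate: the number of positions that already match the target."""
    return sum(a == b for a, b in zip(text, TARGET))


def mutate(text, rng):
    """Random alteration: overwrite one randomly chosen position with a random letter."""
    i = rng.randrange(len(text))
    return text[:i] + rng.choice(ALPHABET) + text[i + 1:]


def evolve(start, generations=5000, seed=0):
    """Mutate the current standard; keep the variant only when it scores strictly higher."""
    rng = random.Random(seed)         # fixed seed so that repeated runs are identical
    best, best_score = start, fitness(start)
    for _ in range(generations):
        child = mutate(best, rng)
        score = fitness(child)
        if score > best_score:        # the filter: only improvements become the new standard
            best, best_score = child, score
    return best, best_score


start = "a" * len(TARGET)
best, score = evolve(start)
print(repr(best), "matches", score, "of", len(TARGET), "positions")
```

Nothing in the loop knows how to write the phrase. It only proposes blind changes and keeps the ones that score better, which is the whole of the mechanism, and also the reason the approach depends so heavily on having a good measure of effectiveness.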

SIXTH: CONVERGENCE & TRANSFORMATION

Domains are gradually converging. In simplest terms, once upon a time we had chemistry and biology as distinct and separate enterprises, now we have biochemistry. Such moments of convergence, generating new sciences and insights, turn out to be some of the most fecund moments in the evolution of our knowledge and the development of our technologies.

Today we are witnessing the convergence of three hitherto-separate fields with the birth of BINT: Bio / Info / Nano Technology.

At the same time, we need to develop what the NSF calls transformative research, that is, research capable of changing the paradigm in some fields and domains, such as synthetic biology and femto-chemistry. Such research is extremely valuable. Thus we witnessed the discovery of the structure and mechanism of DNA engender entire fields like genomics, proteomics and metabolomics.

A question before us is whether such developments will remain serendipitous, or whether our research paradigm will systematically force the development of such converging domains and transformative insights. I believe we are poised to do the latter.

SEVENTH: PLURI-DISCIPLINARITY & POLICY

There is real value in crossing disciplines. Increasingly, both in academic organization and in tackling real-life problems, we note that the old “silos” of disciplines are counterproductive. Much of the most interesting work is being done in between the disciplines, where they intersect or where the gaps are. Increasingly we recognize that our real life problems, such as poverty, gender or the environment, are all multi-dimensional and complex and require a special way of organizing all the various disciplinary inputs. Just as we say that diversity is enriching, so is the sharing of knowledge across disciplines.

Already we have many examples in top level universities: Columbia’s Earth Institute, and Stanford’s BioX complex with its shared labs, flexible layouts and ample meeting space for brainstorming and discussion. More radical departures are on the horizon, from Olin College of Engineering (founded in 2001 in Needham, Massachusetts), which abolished departments for its 300 students, to the enormous new KAUST, which has replaced traditional departments with just four interdisciplinary research institutes: (i) Biosciences, (ii) Materials sciences, (iii) Energy and the environment, and (iv) Computer sciences and math.

In addition, one can note the emergence of the problem-based approach in medical education, which was pioneered at McMaster but taken up rapidly by other places like Bahrain (Abdallah Daar). It was based on the same educational philosophy: they too did away with departmental structures and adopted inter-disciplinarity in a big way.

Whether these are just fads or the wave of the future will soon become apparent. But it is clear that greater inter-disciplinarity is the hallmark of the future, especially in trying to bring the knowledge of research to bear on the real life problems of today and tomorrow. This will not diminish the fact that it will be accompanied by pointed and very specialized research on particular problems.

The nature of the challenge, its scale and complexity, requires that many people have interactional expertise to improve their efficiency working across multiple disciplines as well as within the new interdisciplinary area. At present there are three major ways of organizing joint work between the disciplines: Inter-disciplinary, Multi-disciplinary and Trans-disciplinary.

Inter-disciplinary: This is more of a team approach to organizing intellectual inquiry in an evolving field. Aspects of the challenge cannot be addressed easily with existing distributed knowledge, and new knowledge becomes a primary sub-goal of addressing the common challenge.

Multi-disciplinary: This is where disciplines are joined without integration. Each discipline yields discipline-specific results. For example: the analysis of various aspects of the AIDS pandemic (medicine, politics, and epidemiology) where each section stands alone.

Trans-disciplinary: This is the case where a certain philosophy or conceptual construct dissolves boundaries between disciplines and overlays approaches from different disciplines. For example: The Marxist approach to disciplines such as art history or literature, thus applying philosophies, insights and methodologies of sociology, economics, politics, etc. to these areas.

Whichever of these pluri-disciplinary approaches is adopted, we will need more of them to address our new realities, and not just within the natural sciences, but also crossing to the social sciences and the humanities. However, the increasing complexity may well be pulling in the other direction of increasing specialization at the tips of the knowledge disciplines, except when they confront transformative research or convergent technologies.

EPILOGUE

General implications: It is clear from the preceding that we are entering a new age where the production and dissemination of knowledge, its storage and retrieval, its understanding and manipulation, its interpretation and reinterpretation, its integration and reinvention, all necessary parts of a functional cultural legacy and a dynamic cultural scene, will be different. If the diagnosis is correct, then we should be thinking from now as to how to design the infrastructure of knowledge in our societies to take into account The Seven Pillars of the New Knowledge Revolution, as I have chosen to call them, and their implications. By the infrastructure, I mean the education system from pre-school to post graduate studies; the research institutions in universities, independent labs and in the private sector; and the supporting structures of knowledge and culture that are libraries, archives and museums. It is the anticipation of these developments in the design of our institutions today that will make the transitions into the future world of tomorrow easier, more productive and less stressful. Letting things run their course will not change the future, but it will make the transitions more stressful and possibly, in some cases, even wrenching.

Some additional Questions: This quick sketch of the seven pillars of the new knowledge revolution raises many questions that deserve thoughtful reflection. We can see, for example, that heuristic reasoning, so important for those creative leaps of the imagination, will be helped by machines but limited by complexity. Will it become more frequent or rarer? Likewise, some see the need to focus the next generation of software design on developing tools for pattern recognition, analog programming and non-linear approaches. What will be the role of evolutionary programming and the role of human innovation in these developments? Is there a special role for cellular automata as Stephen Wolfram would want us to believe? Finally, how do we encourage young people not to limit themselves to searching within the boundaries of the known paradigm, and to break out beyond the known?

ENVOI:

Are we capable of anticipating the likely changes that the seven pillars of the knowledge revolution will wreak? Will we be able to work out the institutional arrangements that these changes will require, and anticipate these implications? I think not. Only with hindsight will we know the measure of that success. At present, we can only raise questions and express hopes…

Can we even claim to have properly sketched out the full range of implications that the seven pillars of the new knowledge revolution will force upon us? Do we know what the technologies of the future will do to our ability to summon the spirit of the past and conjure inspiring images to help us create a new future? Who can tell?

Yes, there are no complete or even fully satisfying answers to many questions implicit in the discussions above. But in this modern age, we are, to use the expression of Boorstin, “Questers”, who understand that knowledge and cultural expression are a journey and not a destination, and who recognize that there is more importance in the fecundity of the questions than in the finality of the answers.

THE SHAPE OF TOMORROW – PART II

SOME IMPLICATIONS OF THE KNOWLEDGE REVOLUTION:

General implications:

It is clear from the preceding that we are entering a new age where the production and dissemination of knowledge, its storage and retrieval, its understanding and manipulation, its interpretation and reinterpretation, its integration and reinvention, all necessary parts of a functional cultural legacy and a dynamic cultural scene, will be different.

If the diagnosis is correct, then we should be thinking from now as to how to design the infrastructure of knowledge in our societies to take into account The Seven Pillars of the New Knowledge Revolution, as I have chosen to call them, and their implications. By the infrastructure, I mean the education system from pre-school to post graduate studies; the research institutions in universities, independent labs and in the private sector, and the supporting structures of knowledge and culture that are libraries, archives and museums. But the most important and far-reaching impact will be on the book as we know it.

I. IMPLICATIONS FOR THE BOOK:

The most profound implications of the preceding discussion are for that foundation of the recording and transmission of knowledge for the last few millennia: The Book – the parsed, “dead” codex (book form) that relies mostly on text. Since the transition of written knowledge from scrolls to codex, it has been the mainstay of knowledge. The schoolbook is the basis for the formal instruction of children. The book is the mainstay of research and higher learning. And the cultural output of a society is frequently gauged by the number of important books that they have produced and continue to produce. It is true that, in the last century, the film and pictures and a stream of television programming have also become important elements in the gauging of a society’s culture and its output, but the book has continued to reign supreme in its capacity to signal permanence and lasting value. No more.

But the Book will remain, albeit in a different role. It is clear that electronic books will become the vehicle of choice to convey the parsed and organized knowledge that we will still want to retrieve in book form. The nature of the receptacle of choice to receive the electronic book from the internet will vary. Whether tablets (from the Sony E-reader to the Kindle to the Apple iPad to the yet-unborn technical wonders of the future) or other handheld devices or the conventional screen of a computer, people will probably be able to choose from various designs, as the material itself will become increasingly adaptable to being downloaded from the internet into endless variations of increasingly compatible devices. Consumer choices will continue to increase, but in substance, for the knowledge that will remain in book form, whether because it is inherited from the past in this form, or newly written in the coming decades, I do not doubt that it will be the electronic form of the book that will take over and dominate the market for books.

Some will retain their love for the book as artifact, and this writer is certainly one of them. And for such people, true bibliophiles, the book will continue to be produced, enjoyed and lovingly cherished. However, I suspect that we will be a rather small minority.

Accordingly, the entire industry based on the conventional ways we know of producing and distributing books and magazines will have to be radically transformed. We are witnessing that in music and will also see it coming to video and film.

But more subtle transformations are already perceptible. Two such aspects deserve mention here: the rise of electronic self-publishing and the emergence of preference software.

First: the rise of self-publishing. Examples now abound of authors bypassing the conventional route of working through an agent to reach the established publishers, and going directly to uploads on Amazon’s Kindle, soon to be joined by others. This market for non-traditional books is growing at a phenomenal rate: it grew by an amazing 750,000 new titles in 2009, up 181% from the previous year. Five of the 100 bestsellers on Amazon are self-published, and Amazon’s Kindle Store now produces more business than its hardbound editions (see Isia Jasiewicz, “Who needs a publisher?” in Newsweek, 9 August 2010, p. 43). Print on demand, which should, over time, make sure that no book is ever out of print, is another wrinkle on how the staid business of book publishing for commercial profit is finally being affected by the new technologies.

All in all, it is clear that the early pioneers who focused on scholarly audiences and tried to harness the new technologies, like Project Gutenberg, and the e-brary, and the BA’s own DAR, are finally being joined by the commercial publishers who had until recently been reluctant observers of the revolution being wrought by Sony, Amazon and Google and now Apple.

The second issue is the emergence of preference software. Preference software is software that allows a website to help the user choose a product, in this case a book or a piece of music. Most preference software works by mobilizing the shopping patterns of the users. The most well-known is the Amazon.com software that hits you with additional titles once you have selected something for purchase, prefaced by comments like: people who bought what you are about to buy (or just did buy) also bought the following titles… Or even: people who have browsed the titles that you just browsed have been interested in the following titles. Very few, such as Pandora.com in music, actually analyze the music itself in detail and then search for pieces that have the same patterns as the one you selected. But Pandora, with its more scientific approach based on the product, not on the crowd’s preferences, is a minority in the market, largely because of the complexity of undertaking the task and the need to have experts do the analysis of the pieces.
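The crowd-based variety of preference software can be illustrated with a very small sketch. It simply counts which titles turn up together in the same purchases and suggests the most frequent companions. This is an assumed, generic co-purchase count and not a description of how Amazon's actual system works; the titles, the baskets and the function names are all invented for the example.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_co_purchases(baskets):
    """For every title, count how often each other title appears in the same basket."""
    co = defaultdict(Counter)
    for basket in baskets:
        for a, b in permutations(set(basket), 2):
            co[a][b] += 1
    return co


def also_bought(co, title, k=3):
    """Return up to k companion titles, most frequent first (ties broken alphabetically)."""
    companions = co.get(title, Counter())
    ranked = sorted(companions.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:k]]


# Hypothetical purchase histories, one list per customer.
baskets = [
    ["Bestseller A", "Bestseller B", "Obscure Gem"],
    ["Bestseller A", "Bestseller B"],
    ["Bestseller A", "Rare Monograph"],
    ["Bestseller B", "Obscure Gem"],
]

co = build_co_purchases(baskets)
print(also_bought(co, "Bestseller A", k=2))
```

Note that the software never looks inside the books. It knows only what the crowd has bought, which is precisely why its suggestions lean towards whatever is already popular.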

While many, including this author, find such preference software a useful service, we should be aware of the tendencies that it encourages. Basically, this will reinforce the tendency of sending the crowd towards the bestsellers rather than informing them of the obscure and rigorous gem here and there. Crowd pleasing bestsellers benefit enormously from this approach.

Likewise, search engines, from Google to Yahoo to Baidu, all tend to bring to the top of the list the most visited of the relevant websites. This, in turn, brings more viewers to these sites, and that then tends to further reinforce the current ranking of sites.
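The self-reinforcing loop between ranking and visits can be shown with a toy simulation. Everything in it is an assumption made for illustration: three hypothetical sites with nearly equal starting traffic, a ranking based purely on visits to date, and an invented rule that of every 100 new searchers, 60 click the first result, 30 the second and 10 the third. Real search engines weigh many more signals than this.

```python
def simulate_ranking_feedback(visits, rounds=20, clicks_by_rank=(60, 30, 10)):
    """Each round, rank sites by visits so far, then hand out new clicks by rank position."""
    visits = dict(visits)             # work on a copy so the caller's data is untouched
    for _ in range(rounds):
        ranking = sorted(visits, key=lambda site: (-visits[site], site))
        for site, clicks in zip(ranking, clicks_by_rank):
            visits[site] += clicks
    return visits


def share(visits, site):
    """Fraction of all visits that went to one site."""
    return visits[site] / sum(visits.values())


# Hypothetical starting point: three sites that are almost equally popular.
before = {"site_a": 105, "site_b": 100, "site_c": 95}
after = simulate_ranking_feedback(before)

print("share of the leading site before:", round(share(before, "site_a"), 3))
print("share of the leading site after: ", round(share(after, "site_a"), 3))
```

Under these assumptions the ordering never changes, and a lead of a few visits hardens into a dominant share of the traffic, which is the reinforcement described above.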

In political matters, it is clear that the new technologies, by providing enormous choice for opinionated expression, find crowds going to those writers or commentators who reinforce their prejudices rather than enlarge their horizons. Indeed, birds of a feather tend to flock together. Greater political polarization in society is likely to be the result.

Will all this create a more opinionated, less tolerant, more narrowly educated and less broadly cultured society? Or will the technologies actually spawn many more possibilities that will nourish contrarian views and novel ideas? Both tendencies are there, in our hands. It is difficult to predict which of these will prevail, but what is clear is that the world of book (and music and video) publishing will not be the same.

These major transformations are not for tomorrow. We will see a lot of the old linger on and co-exist with the new, but gradually the new will replace the old, and in the remote parts of the world, the new will take root without going through all the intermediate steps that we witness in the more advanced parts of the world that have been at the cutting edge of these technological transformations.

II. THE IMPLICATIONS FOR EDUCATION AND LEARNING:

Schools, universities and research facilities:

The structure of the institutions of education and learning, those that channel the preparation of future generations of humans and the trans-generational passing-on of knowledge, will change. They will not only continue to evolve, they will morph into something unrecognizable to those who think of yesterday’s schools as a model, or those who yearn for their collegiate university experience. The public and private laboratories and research institutes, those institutions that help in the production, assimilation and codification of current knowledge and the creation of new knowledge, will also change. However, the likely changes in the institutions of education and research are a topic for another discourse. Here, I will just say a few words on the more obvious likely impacts of the seven pillars of the new knowledge revolution on schools, universities and research facilities.

Reinventing Education

I think that we need to think even more boldly and dream of reinventing education completely…

The old model of rigid linear advance through 12 years of schooling, followed by four years of university after which one receives a degree that certifies one’s entry into the labor force to practice some profession for forty years and then retire, will become totally obsolete. Continuous learning will be more than a slogan; it will be an economic necessity. The market will demand new skills, and an increasingly competitive world will force enterprises to continuously upgrade the skills of their labor force.

Education is likely to change profoundly in the coming decades, in terms of content, participants, methods, and organizational setting. Let us consider each of these in turn.

On content: curricula and syllabi need to be revised to emphasize basic skills, problem solving and learning to learn. Teachers must be much better trained to become enablers who will encourage children to realize the joy of discovery, and be able to utilize teaching methods that allow each individual to progress at their own pace.

The educational system of the future will witness an explosion in Content, beyond our capacity to imagine today. People will emerge from their basic education – increasingly including university level education – having learned to learn, and having acquired a basic infrastructure of fundamental skills, including interpersonal skills and the ability to function in a society. These fundamental skills will be complemented by a vast array of offerings in every conceivable combination of units and modules covering everything from artistic expression to advanced genomics, from music appreciation to mathematics. The flexibility of these combinations will allow people to learn continuously throughout their lives.

New fields of learning will come about. The most important discoveries will be at the intersection of the existing disciplines. In the past we had biology and chemistry. Today we also have bio-chemistry, in addition to biology and chemistry. Totally new fields have come about, such as genomics and proteomics. And beyond the natural sciences we are discovering how important trans-disciplinary work is. We need the wisdom of the humanities in addition to the knowledge of the natural sciences. We need the insights of the social sciences to bear upon the technical options of engineering.

Participants in our educational enterprise will still involve parents at home and teachers at school. But students will play a bigger role in their own development. And virtual communities on the Internet will create a new form of peer group affecting the mental and emotional growth of the children and young adolescents of the future. I say this, fully cognizant of both its upside and its downside. Perhaps we should be more open to what our children will have to tell us… Take the words of America’s Poet Laureate, Robert Frost:

Now I am old my teachers are the young.

What can’t be molded must be cracked and sprung.

I strain at lessons fit to start a suture.

I go to school to youth to learn the future.

-- Robert Frost

Methods of teaching in the last fifty years have been almost totally confined to formal instruction in classrooms. Lectures, tutorials and supervised work have been the staples of education from time immemorial. We have barely started to explore guided learning through such instruments as distance learning, the Open University and modular adult education classes. We have barely scratched the surface of the potential that exists in self learning. There is room to do much more in guided learning, and to help a thorough-going revolution in self-learning.

Although I believe that formal instruction will continue to be important, it will increasingly be supplemented by both guided learning and self-learning through myriad offerings. Driven by curiosity and self-interest, the lifelong learners of the future will alternate between broadening themselves or pursuing hobbies on the one hand, and acquiring marketable skills on the other. The offerings for both will be there.

The organizational setting, the schools and universities, will not be replaced by individuals working on computer terminals or on their mobile phones or other technologies, from home or from elsewhere. This is because they serve three functions: a skill and knowledge imparting function; a certification function; and a socialization function. The first and second will change along the lines I have just described. But the socialization function will remain.

Children need to be with other children of their age, to learn to interact and socialize with peers. Only schools provide the requisite setting for such socialization, an essential feature of emotional development and the formation of effective citizens.

III. THE IMPLICATIONS FOR MUSEUMS, LIBRARIES AND ARCHIVES:

The implications for libraries and museums are profound. Everything from storage to retrieval poses problems of technical and physical obsolescence. For despite their enormous convenience and their ability to expand our mental and physical reach in many innovative ways, the new digital technologies are quite susceptible to rapid obsolescence.

THE IMPLICATIONS FOR MUSEUMS

Museums will have to become much more than the storage place of rare originals and the general imparters of knowledge. Yes, there will always be that unique joy, the special feeling of awe that one has in being in the presence of the actual original piece of art or that rare object that has been recognized as worthy of being a “museum piece”. For specialists, there may well be additional and possibly profound insights that can be gained only by the examination of the original work. But museums deal with more than specialists; they have to cater to the needs and wants of the general public. They must take note of the fact that the web will provide excellent materials, in 3-D animations that will look very lifelike, and will provide access to many sources of information. Thus the displays of tomorrow will change. They will be more like curated shows, perpetually changing as the institution tries to reach the public in myriad ways. The skill of the curators will be apparent in the quality of the shows that they organize. So rather than standardized fare, we can expect that the museums of tomorrow will have perpetually changing displays that make full use of the available technologies, but provide an added “oomph” that can only be provided by the size of the exhibition, the excellence of the space, the attractiveness of the surroundings, and the exciting manner in which the building itself provides a sense of place.

The Global Museums – A new reality:

Virtual museums can be created that allow the visitor to see and compare pieces that are currently at various museums around the world. In fact, there are some things that can be done virtually that cannot be done in a real museum with a real antique, such as turning the piece around and upside down to see all its facets. And in the future, the 3-D virtual reality character of the presentations will be much better than anything that we can envisage at present, far better than current technologies from holograms to 3-D animations.

As in the case of the WDL, we can already see some of the early manifestations of that in the Global Egyptian Museum (GEM), which is currently managed by the CULTNAT Center of the BA, where the ancient Egyptian artifacts of seven museums are already grouped and retrievable and comparable on computer screens everywhere. That is just a glimpse of what the future holds.

The actual museums will invariably hold combinations of digital presentations that will complement the viewing of the artifacts by placing them in the context that they were designed for and showing the role they played in the past. The combination of the global virtual museum of the future and the powerful new digital presentations will allow the scholar and the general public to find both enjoyment and knowledge in their museum visit.

THE FUTURE OF LIBRARIES:

The experience of the World Digital Library (WDL) shows glimpses of what the future may hold for libraries. This raises the question: if all the material will be presented in virtual forms and brought to us wherever we are, at home or at the office, what will be the purpose of the space we now call a library? There are at least five special functions that these new institutions of the third millennium will undertake:

First, they will continue to harbor the originals. Manuscripts and first editions will continue to work their fascination for us, as the objects -- above and beyond the content -- are seen to have intrinsic value and worth. Being able to consult them will confer on the visitor special joys and possible new insights.

Second, the library will become a meeting place for the like-minded and for those interested in particular themes. A treasured meeting place, evoking the past and surrounded by the treasures of our heritage, it will be an inspiring venue for the literati and for the public at large.

Third, there will continue to be certain materials that for institutional and monetary reasons will be beyond the reach of most people to get for a nominal fee, and that the libraries will be able to provide only in situ. In addition, the libraries will have an integrated infrastructure for researchers, artists and critics that will enable them to find in one place, with excellent services, the full gamut of the materials and facilities they need.

Fourth, the library will be the appropriate bridge between the population, and especially the researchers among that population, and the national and international archiving system. There, the sheer scale of the enterprise will pose particular problems that are likely to be addressed only by libraries and archiving institutions.

Fifth, the library will continue to have special programs that involve children, schools, youth and their parents in the magnificent enterprise of socialization and learning that will continue as long as societies continue to exist. Such an enterprise may change in content as the world around us evolves, especially in the radical manner that I have described, but it will continue nonetheless. The transition from childhood to adulthood involves more than skills transfer; it involves the learning of who we are and where we belong. Culture manifests itself at every turn. The institutions of culture will therefore be part of that future that we look to, as much as they have been part of our past.

Finally, libraries will become even more important in this period of boundless electronic information of enormously variable quality. Having too much information is as problematic as having too little for those who do not know their subject matter well. Libraries will help by organizing coherent domains of knowledge and sharing in the global explosion of information. They will not be just depositories of books and magazines, but will become essential portals through which learners – and the general public – will be helped to explore the vast and growing resources that will be at their fingertips.

The Digital Libraries Of Tomorrow

Libraries are a fundamental part of the cultural landscape of any country. They preserve the achievements of the past and provide access to that common heritage of humanity. They are fundamental components of the education and training system, and increasingly an important instrument for spreading the values of rationality, tolerance and the scientific outlook. Many libraries have important public outreach functions. However, they are also an essential part of the scientific research and development efforts that drive contemporary economic growth.

Less developed countries face problems of access to recent research (mostly in journals), to reference material (mostly in libraries) and to databases (some of which are proprietary), all of which severely constrain their aspiring scientists and practicing researchers. The costs are too high for their national budgets, and frequently a lack of foreign currency further limits their ability to purchase the needed materials, even for central institutions such as national or university libraries. This issue has been exacerbated in the last decade by the rapidly multiplying amounts of information, journals and publications. However, libraries are currently undergoing a major transformation in the wake of the digital revolution. Indeed, the digital revolution and the enormous advances in ICT have opened up opportunities for remedying this as never before.

The old approach of having books and other written materials collected in a usable fashion in fixed locations, where interested persons can have access to the materials, has long suffered from several constraints:

- The huge costs involved in collecting the materials, cataloging and maintaining them

- The limited choice available in any one location

- The difficulty of accessing the material in the truly large collections (e.g. Library of Congress, BNF, the British Library, etc.), where the person who manages to get there physically and requests the book has to wait as long as one hour, sometimes only to be told that it is being used by another reader.

These constraints of space and time are suddenly falling away in the wake of the Information and Communications Technology (ICT) revolution and the widespread use of new digital technology for the production and dissemination of the products of the human mind: text, data, music, image, voice… all are now unified in bits and bytes spelled in the language of ones and zeros.

The ICT breakthroughs, especially in terms of the connectivity that comes from the Internet and the ease of use of web-based interfaces, have revolutionized the practice of science. Material is instantaneously available to researchers everywhere at all times by posting on the web. Downloading is easy, and the work is commented on by many all over the world almost instantaneously.

Within this context, many questions arose for the idea of the library and for the legal framework within which the utilization of the material takes place. The Intellectual Property Rights (IPR) regimes that we have come to know and use increasingly seemed under challenge as the libraries started moving towards hybrid systems where they continued the traditional functions of the lending or reference library of printed materials while taking on the new functions of providers of on-line digital material.

The advantages of digital libraries based on the new technologies are manifest:

- Immediate and easy access to materials on-line 24 hours a day, 7 days a week;

- No need to be physically present at the location of the digital materials;

- Copies of the material available in any library can be made available to other libraries at almost no cost, and with the same quality as the original material;

- Searching for information is infinitely easier in the digital format;

- Keeping material up to date is no longer an issue especially for locations previously considered remote from the centers of publication and dissemination of knowledge;

- Thanks to the efforts led by the Carnegie-Mellon Foundation, the back issues of scientific journals are presently being made available for free to poor developing countries, a major gift that is not sufficiently appreciated; and

- We now have enormous academic resources coming on line, as was shown several years ago by MIT’s putting on-line the majority of its course materials.

But several problems are emerging:

- Problems of physical obsolescence of the material;

- Problems of technical obsolescence of the material;

- Problems of the establishment of common standards for the digitization, filing and maintenance of the material so that it can be easily accessed on a common basis; and

- The issue of IPR in the digital age.

The first three of these problems are being handled by a number of major libraries and archives that have a direct interest in establishing a proper system of managing digital resources that are growing much faster than anything we have experienced in human history. Already the amount of material produced in electronic form exceeds all that has been produced in written paper form, and the volume is growing exponentially, by 10% per year or more.

A more complex issue is the management of IPR in the digital age. That was the topic of a major study entitled “The Digital Dilemma” issued by the US National Research Council in 2000, which carefully framed the issues but did not come out with firm recommendations on the most troubling of these issues. Specifically, the choice today is between those who would try to use the new technologies to maintain the system of “copyright” which has evolved during the long era of the print medium, and those who believe that the new digital materials require a different approach that is suited to the possibilities of the new technology. Copyright was a concept that evolved at a time when the making of copies was the mechanism of reproduction and was tied to the labor and quality loss of making additional copies. Today’s digital technology effortlessly produces any number of copies of identical quality. Furthermore, these copies can be accessed from anywhere in the world where the Internet reaches. Thus new approaches that would protect the rights of the innovators while allowing the convenience and simplicity of access to all should be developed, rather than trying to harness the new technologies (such as water-marking and other techniques) to protect the business model of “copyright” that evolved in a past era.

New technologies require new business models. The achievements of Henry Ford, Bill Gates and Michael Dell are examples of how the business model was suited to the technological innovation/change and thus worked well. In their cases, the adaptation of the business model to the new technology (the assembly line, the Operating System of the PC and the use of web-based sales to cut out the distributor) is what allowed them to outdistance their rivals. Jeff Bezos and Amazon.com revolutionized on-line retailing. Pierre Omidyar and the creation of eBay showed what the Internet could do in facilitating transactions between sellers and buyers. Larry Page and Sergey Brin, the founders of Google, are other stellar examples, showing that you could provide a wonderful service for free and also generate lots of revenue in new ways (targeted advertising).